Unsupervised learning is a type of ML where models are trained using un-labeled data to discover hidden patterns & insights from the data without any supervision. As a beginner in the field of machine learning, understanding unsupervised learning techniques can be a bit overwhelming. To help you get started, we’ve created this ultimate cheat sheet that covers the most common unsupervised learning algorithms and their applications.

Table of Contents

Introduction

Unsupervised Learning is a type of machine learning technique where the algorithm learns from unlabeled data without any predefined outputs or target variables. Unlike supervised learning, where the algorithm is trained on labeled data to predict a specific outcome, unsupervised learning algorithms find patterns and relationships within the data on their own.

The goal of unsupervised learning is to find the underlying structure of a dataset, group similar data points, and represent the dataset in a compressed format. It can be likened to how humans learn new things based on their own experiences, without direct instruction.

- Use Cases:

- Unsupervised learning is helpful for extracting useful insights from data.

- It closely mimics how real AI might learn independently.

- It works with unlabeled and uncategorized data, making it essential for scenarios where input data lacks corresponding output labels.

- Working Process:

- Input Data: Start with an unlabeled dataset—data that lacks predefined categories or labels.

- Pattern Discovery: The machine learning model interprets the raw data to uncover hidden patterns.

- Algorithm Application: Suitable algorithms (such as k-means clustering, decision trees, etc.) are applied to group data objects based on similarities and differences.

- Representation: The algorithm represents the dataset in a more manageable format.

- Example:

- Imagine an unsupervised learning algorithm given a dataset containing images of various cats and dogs.

- The algorithm hasn’t been trained on this specific dataset and doesn’t know the features of the images.

- Its task is to identify image features independently.

- By clustering the image dataset based on similarities, the algorithm discovers patterns and groups the images accordingly.

Unsupervised Learning: Algorithms

Clustering Algorithms

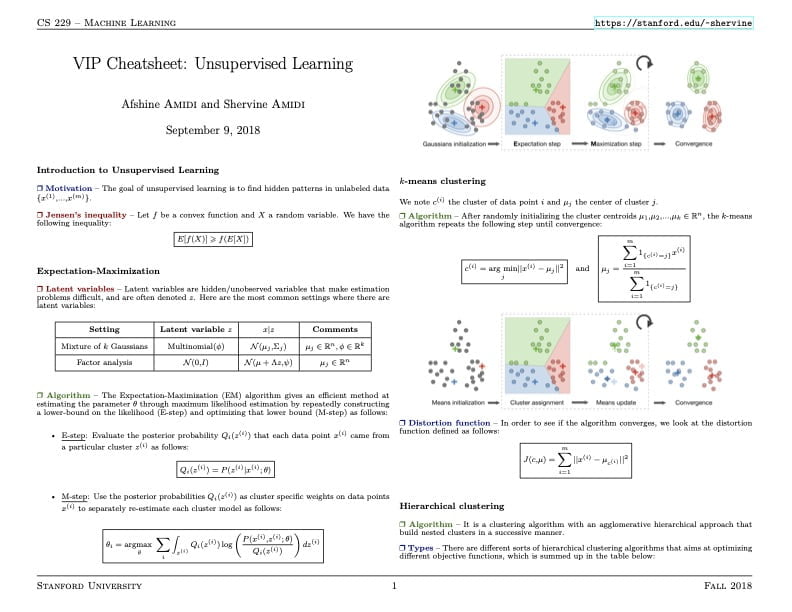

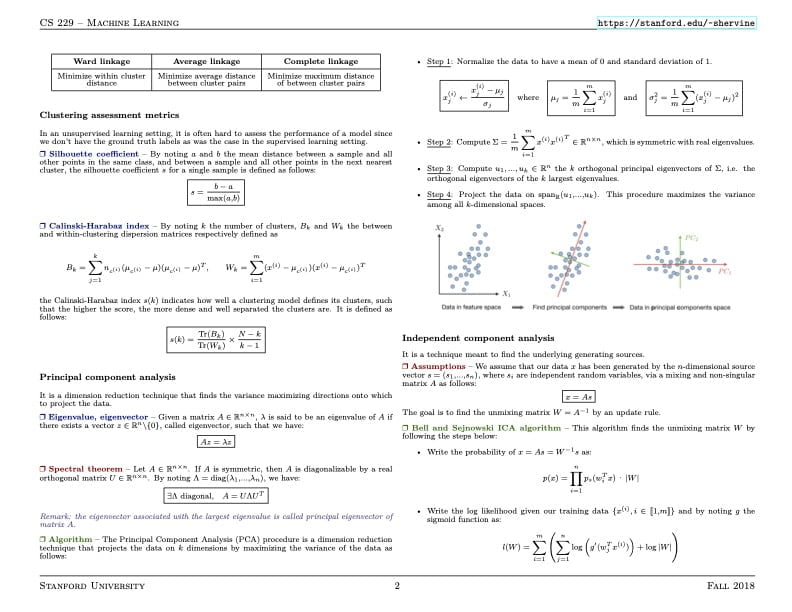

1. K-Means Clustering

- Divides the data into K clusters according to similarity

- Assigns data points to the closest cluster centroid recursively

- Works well for finding spherical clusters

- Sensitive to initial centroid positions and outliers

2. Hierarchical Clustering

- Builds a hierarchy of clusters

- Can be agglomerative (bottom-up) or divisive (top-down)

- Generates a dendrogram for visualizing the clustering process

- Useful for data with varying cluster sizes and densities

3. DBSCAN (Density-Based Spatial Clustering of Applications with Noise)

- Identifies clusters based on density

- Marks outliers and can handle arbitrary shapes

- Requires tuning of two parameters: epsilon (radius) and minPts (minimum points)

- Suitable for discovering clusters of varying densities

4. KNN (k-Nearest Neighbors)

- A non-parametric, instance-based learning algorithm

- Used for both classification and regression tasks

- Works by finding the k closest data points (neighbors) to a new data point

- For classification, it assigns the majority class label among the k neighbors

- For regression, it takes the average or median of the k neighbors’ values

Dimensionality Reduction Techniques

5. Principal Component Analysis (PCA)

- Transforms high-dimensional data into a lower-dimensional space

- Locates the primary components of the data that best capture its variation.

- Useful for data visualization and feature extraction

- Can be applied to other algorithms as a preprocessing step.

6. t-SNE (t-Distributed Stochastic Neighbor Embedding)

- Visualizes high-dimensional data in a low-dimensional space

- Preserves local structure and clustering patterns

- Particularly effective for visualizing complex, non-linear data

- Can be computationally expensive for large datasets

7. Independent Component Analysis (ICA)

- A technique for separating a multivariate signal into independent non-Gaussian signals

- Assumes that the observed data is a linear mixture of independent components

- Finds a linear transformation that maximizes the non-Gaussianity of the components

- Useful for blind source separation problems, such as separating audio signals or removing artifacts from images

- Can be seen as an extension of PCA, but with the additional assumption of non-Gaussianity

8. Singular Value Decomposition (SVD)

- A matrix factorization technique that decomposes a rectangular matrix into three matrices

- Given a matrix A, SVD factorizes it as: A = UΣV^T

- U and V are orthogonal matrices, and Σ is a diagonal matrix of singular values

- The singular values in Σ represent the importance of each dimension

- By keeping only the top k singular values, SVD can be used for dimensionality reduction

Association Rule Learning

9. Apriori Algorithm

- Discovers frequent itemsets and association rules in transaction data

- Generates rules based on support and confidence measures

- Used in market basket analysis and recommender systems

- Computationally expensive for large datasets

10. FP-Growth (Frequent Pattern Growth)

- An improved version of the Apriori algorithm

- Builds a compact data structure (FP-Tree) to store frequent itemsets

- More efficient than Apriori for large datasets

- Suitable for mining association rules and frequent patterns

Applications of Unsupervised Learning

- Clustering: Customer segmentation, anomaly detection, image segmentation

- Dimensionality reduction: Data visualization, feature extraction, noise removal

- Association rule mining: Market basket analysis, recommender systems, web usage mining

If you want to learn more about top machine learning algorithms with their pros and cons then click here

Unsupervised Learning: Cheat Sheet

Summary

In summary, unsupervised learning allows models to explore data autonomously, making it a powerful tool for uncovering hidden structures and insights. This cheat sheet covers the most commonly used unsupervised learning algorithms and techniques. As a beginner, understanding the underlying concepts and applications of these algorithms will provide a solid foundation for further exploration in the field of unsupervised learning.

Remember, practice and hands-on experience are key to mastering these techniques. Happy learning!

Learn more about machine learning and other topics

- Supervised Learning

- Machine Learning: A Quick Refresher and Ultimate Cheat Sheet

- Machine Learning Algorithms: How To Evaluate The Pros & Cons

- Data Science Cheat Sheets

- Probability and Statistics

- Deep Learning Cheat Sheets

- Unsupervised Learning by Google