Deep learning teaches computers to process data in a way similar to how the human brain learns. It recognizes complex patterns in many kinds of data to produce accurate insights and predictions.

Introduction

In the rapidly evolving field of deep learning, staying up-to-date with the latest techniques, architectures, and best practices can be a daunting task. That’s where a comprehensive deep learning cheat sheet comes into play, serving as a valuable resource for both novice and experienced practitioners alike.

What is Deep Learning?

Deep learning has revolutionized the field of artificial intelligence, enabling machines to perform tasks that were once considered exclusive to human intelligence. However, the complexity of deep learning algorithms and architectures can be overwhelming, especially for those new to the field. This is where a deep learning cheat sheet comes in handy, serving as a concise reference guide to the essential concepts, techniques, and best practices.

- Artificial neural networks serve as its foundation.

- It allows computers to learn from data by discovering intricate patterns and relationships.

- Unlike traditional programming, deep learning doesn’t require explicit rules; it learns directly from examples.

- The popularity of deep learning has surged due to advances in processing power and the availability of large datasets.

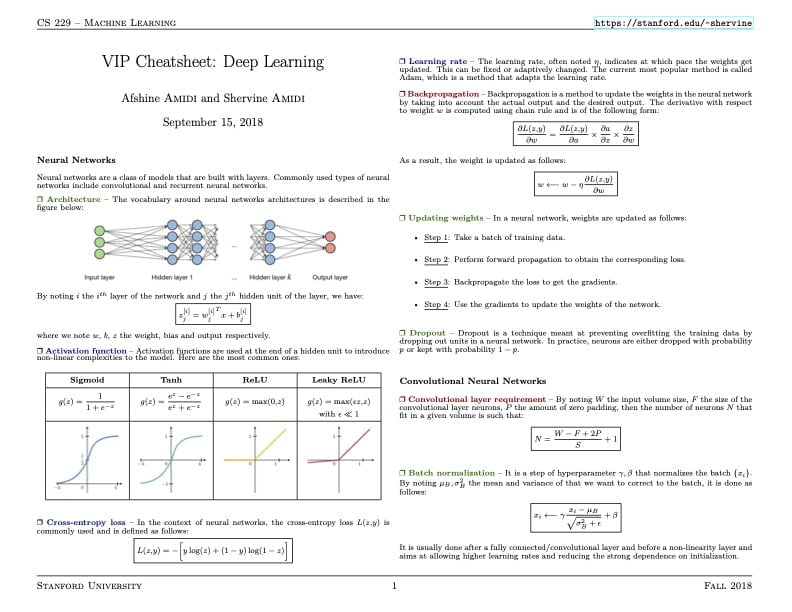

Neural Networks and Layers

- In a fully connected deep neural network:

  - Input layer: Receives data.

  - Hidden layers: Process and transform data through nonlinear transformations.

  - Output layer: Produces the final result.

- Deep neural networks have multiple interconnected layers, allowing them to learn complex representations.
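As a rough illustration of the layer structure described above, here is a minimal sketch of a forward pass through a tiny fully connected network in plain Python. The weights, biases, and helper names (`dense`, `relu`, `forward`) are hypothetical and chosen only for this example; real projects would normally use a framework instead.

```python
def dense(inputs, weights, biases):
    """One fully connected layer: every output is a weighted sum of all inputs plus a bias."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def relu(values):
    """Nonlinear transformation applied after each hidden layer."""
    return [max(0.0, v) for v in values]


def forward(x, layers):
    """Pass x through the hidden layers (dense + ReLU), then a linear output layer."""
    *hidden, output = layers
    for weights, biases in hidden:
        x = relu(dense(x, weights, biases))
    weights, biases = output
    return dense(x, weights, biases)


# Hypothetical hand-picked parameters: 2 inputs -> 2 hidden units -> 1 output.
layers = [
    ([[1.0, -1.0], [0.5, 0.5]], [0.0, -1.0]),  # hidden layer
    ([[2.0, 1.0]], [0.5]),                     # output layer
]
result = forward([3.0, 1.0], layers)
print(result)  # [5.5]
```

In a trained network the parameters would be learned from data rather than written by hand.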

Below are some fundamental techniques, functions, and algorithms used in neural network architectures.

Activation Functions

Neural networks can learn complicated patterns because activation functions introduce non-linearity into the system. Below are the activation functions most commonly used in deep learning applications:

- Sigmoid

- ReLU (Rectified Linear Unit)

- Tanh

- Softmax
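The four activation functions listed above can be sketched in a few lines of plain Python. This is an illustrative version using only the standard `math` module, not how any particular framework implements them.

```python
import math


def sigmoid(x):
    """Squashes x into (0, 1); the two branches avoid overflow for large |x|."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    z = math.exp(x)
    return z / (1.0 + z)


def relu(x):
    """Passes positive values through and clips negative values to zero."""
    return max(0.0, x)


def tanh(x):
    """Squashes x into (-1, 1) and is centered at zero."""
    return math.tanh(x)


def softmax(values):
    """Turns a list of scores into probabilities that sum to 1."""
    shift = max(values)  # subtracting the max keeps exp() numerically stable
    exps = [math.exp(v - shift) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


probs = softmax([1.0, 2.0, 3.0])
```

Sigmoid, ReLU, and Tanh act on one value at a time, while Softmax acts on a whole vector of scores, which is why it is typically used on the output layer of a classifier.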

Loss Functions

Loss functions measure the discrepancy between the predicted output and the true output, guiding the training process. Below are several loss functions that you can use in a deep learning project:

- Mean Squared Error (MSE)

- Cross-Entropy Loss

- Hinge Loss

- Focal Loss
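To make the losses above concrete, here is a minimal sketch of each one in plain Python. It assumes the binary case for cross-entropy and focal loss (labels 0 or 1, predictions as probabilities) and labels of -1 or +1 for hinge loss. The focal loss shown omits the optional class-weighting term, and `gamma=2.0` is just a commonly used default.

```python
import math


def mse(y_true, y_pred):
    """Mean squared error, typically used for regression."""
    return sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true)


def binary_cross_entropy(y_true, p_pred, eps=1e-12):
    """Cross-entropy for labels in {0, 1} and predicted probabilities."""
    total = 0.0
    for t, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)  # clamp to avoid log(0)
        total += -(t * math.log(p) + (1 - t) * math.log(1.0 - p))
    return total / len(y_true)


def hinge(y_true, scores):
    """Hinge loss for labels in {-1, +1} and raw (unbounded) scores."""
    return sum(max(0.0, 1.0 - t * s) for t, s in zip(y_true, scores)) / len(y_true)


def binary_focal(y_true, p_pred, gamma=2.0, eps=1e-12):
    """Focal loss: cross-entropy down-weighted on examples that are already easy."""
    total = 0.0
    for t, p in zip(y_true, p_pred):
        p = min(max(p, eps), 1.0 - eps)
        p_t = p if t == 1 else 1.0 - p  # probability assigned to the true class
        total += -((1.0 - p_t) ** gamma) * math.log(p_t)
    return total / len(y_true)


labels = [1, 0, 1]
probabilities = [0.9, 0.2, 0.6]
print(binary_cross_entropy(labels, probabilities))
print(binary_focal(labels, probabilities))
```

With `gamma=0` the focal loss in this sketch reduces to ordinary cross-entropy, which is a quick way to sanity-check it.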

Optimization Algorithms

Optimization algorithms are responsible for updating the model’s parameters during training. Below are some popular optimization techniques, along with what each one does.

- Stochastic Gradient Descent (SGD): Updates the model’s parameters in the direction of the negative gradient of the loss function, computed on the current batch of training data.

- Momentum: An extension of SGD that adds a momentum term, a running accumulation of past gradients, to accelerate convergence and help the optimizer move past shallow local minima.

- RMSprop (Root Mean Square Propagation): An adaptive learning rate optimization algorithm. It keeps a running average of the squared recent gradients for each parameter and divides that parameter’s learning rate by the square root of this average.

- Adam (Adaptive Moment Estimation): Combines the benefits of Momentum and RMSprop by incorporating both momentum and adaptive learning rates.
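The update rules described above can be compared on a toy problem. The sketch below minimizes a hypothetical one-parameter loss f(w) = (w - 3)^2 with each optimizer. The learning rates and decay constants are illustrative choices, not recommendations, and the full gradient stands in for a mini-batch gradient.

```python
import math


def grad(w):
    """Gradient of the toy loss f(w) = (w - 3)^2, whose minimum is at w = 3."""
    return 2.0 * (w - 3.0)


def sgd(w, steps, lr=0.1):
    for _ in range(steps):
        w -= lr * grad(w)  # step against the gradient
    return w


def momentum(w, steps, lr=0.1, beta=0.9):
    velocity = 0.0
    for _ in range(steps):
        velocity = beta * velocity + grad(w)  # accumulate past gradients
        w -= lr * velocity
    return w


def rmsprop(w, steps, lr=0.01, decay=0.9, eps=1e-8):
    avg_sq = 0.0
    for _ in range(steps):
        g = grad(w)
        avg_sq = decay * avg_sq + (1 - decay) * g * g  # running average of squared gradients
        w -= lr * g / (math.sqrt(avg_sq) + eps)        # per-parameter scaled step
    return w


def adam(w, steps, lr=0.1, beta1=0.9, beta2=0.999, eps=1e-8):
    m = v = 0.0
    for t in range(1, steps + 1):
        g = grad(w)
        m = beta1 * m + (1 - beta1) * g      # momentum-style first moment
        v = beta2 * v + (1 - beta2) * g * g  # RMSprop-style second moment
        m_hat = m / (1 - beta1 ** t)         # bias correction for the zero start
        v_hat = v / (1 - beta2 ** t)
        w -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return w


for name, optimizer in [("sgd", sgd), ("momentum", momentum),
                        ("rmsprop", rmsprop), ("adam", adam)]:
    print(name, optimizer(0.0, 2000))
```

All four should end up close to w = 3 on this problem. With a fixed learning rate, RMSprop tends to settle within a small band around the minimum rather than exactly on it.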

Regularization Techniques

Regularization techniques are employed to prevent overfitting and improve the generalization capability of deep learning models. Below are several regularization methods that you can apply:

- L1 and L2 Regularization

- Dropout

- Early Stopping

- Data Augmentation
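Three of the methods above are small enough to sketch directly: the L1/L2 penalty terms, dropout (the common "inverted" variant that rescales kept activations), and an early-stopping check. The function names and the `patience` parameter are assumptions made for this example.

```python
import random


def l1_penalty(weights, lam):
    """L1 term added to the loss: lam times the sum of absolute weights."""
    return lam * sum(abs(w) for w in weights)


def l2_penalty(weights, lam):
    """L2 term added to the loss: lam times the sum of squared weights."""
    return lam * sum(w * w for w in weights)


def dropout(activations, rate, rng):
    """Inverted dropout: zero each activation with probability `rate`
    and rescale the survivors so the expected value is unchanged."""
    keep = 1.0 - rate
    return [a / keep if rng.random() < keep else 0.0 for a in activations]


def early_stopping_epoch(val_losses, patience):
    """Return the epoch at which training would stop: the first epoch where
    validation loss has failed to improve `patience` times in a row."""
    best = float("inf")
    bad_epochs = 0
    for epoch, loss in enumerate(val_losses):
        if loss < best:
            best = loss
            bad_epochs = 0
        else:
            bad_epochs += 1
            if bad_epochs >= patience:
                return epoch
    return len(val_losses) - 1  # never triggered: ran to the end


rng = random.Random(0)  # seeded so the example is repeatable
dropped = dropout([1.0, 1.0, 1.0, 1.0], rate=0.5, rng=rng)
stop_at = early_stopping_epoch([1.0, 0.8, 0.7, 0.75, 0.72, 0.71, 0.9], patience=2)
print(stop_at)  # 4
```

Dropout is applied only during training; at inference time the activations are used as they are.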

Visualization and Interpretation

Understanding the inner workings of deep learning models is crucial for debugging, interpreting results, and gaining insights. Below are some visualization and interpretation techniques that provide a window into the model’s decision-making process.

- Activation Maximization

- Saliency Maps

- Layer Visualization

- Dimensionality Reduction (t-SNE, UMAP)
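As a small illustration of the idea behind saliency maps, the sketch below scores each input feature by how strongly the model output changes when that feature is nudged. It uses finite differences on a hypothetical toy model. In practice, saliency maps are usually computed from gradients obtained by backpropagation rather than this way.

```python
def saliency(model, x, eps=1e-5):
    """Approximate |d output / d x_i| for each input feature with central differences."""
    scores = []
    for i in range(len(x)):
        up = list(x)
        down = list(x)
        up[i] += eps
        down[i] -= eps
        scores.append(abs(model(up) - model(down)) / (2 * eps))
    return scores


def toy_model(x):
    """Hypothetical model: depends strongly on x[0], weakly on x[1], not at all on x[2]."""
    return 3.0 * x[0] - 0.5 * x[1] + 0.0 * x[2]


scores = saliency(toy_model, [1.0, 2.0, 3.0])
print(scores)  # roughly [3.0, 0.5, 0.0]
```

Features with higher scores are the ones the prediction is most sensitive to. For an image model, those scores would be arranged back into the image shape to form the map.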

Applications of Deep Learning

To illustrate the versatility of deep learning, below are practical applications across various domains, including computer vision, natural language processing, speech recognition, recommender systems, and generative models.

- Computer Vision (CV): Deep learning powers image classification, object detection, and facial recognition.

- Natural Language Processing (NLP): Used in chatbots, language translation, and sentiment analysis.

- Speech Recognition: Enables voice assistants and transcription services.

- Recommendation Systems: Personalized recommendations in e-commerce and content platforms.

- Generative Adversarial Networks (GANs): A type of deep learning architecture that consists of two neural networks (a generator and a discriminator) competing against each other in a game-theoretic framework.

Popular Deep Learning Architectures

- Convolutional Neural Networks (CNNs): Best suited to image-related tasks.

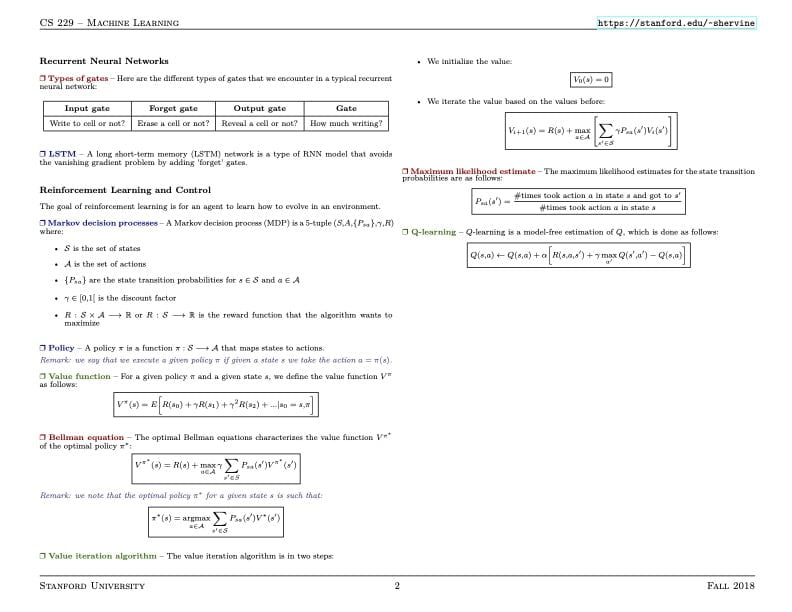

- Recurrent Neural Networks (RNNs): Good for sequential data (like time series and text).

- Deep Belief Networks (DBNs): Used for unsupervised learning and feature extraction tasks.

- Feedforward Neural Networks (FNNs)

- Long Short-Term Memory (LSTM)

- Transformer Models (BERT, GPT)
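To give a feel for why CNNs suit grid-like data and RNNs suit sequences, here is a minimal sketch of the core operation behind each: a one-dimensional sliding-window convolution and a single-unit recurrent update. The scalar weights (`w_x`, `w_h`, `b`) and the example kernel are hypothetical, and real layers operate on multi-dimensional tensors with learned parameters.

```python
import math


def conv1d(signal, kernel):
    """Slide the kernel across the signal and take a weighted sum at each position
    (the 'valid' cross-correlation that deep learning libraries call convolution)."""
    k = len(kernel)
    return [sum(signal[i + j] * kernel[j] for j in range(k))
            for i in range(len(signal) - k + 1)]


def rnn(inputs, w_x, w_h, b, h=0.0):
    """Process a sequence one element at a time, carrying a hidden state h forward."""
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)
    return h


edges = conv1d([1.0, 2.0, 3.0, 4.0], [1.0, 0.0, -1.0])
print(edges)  # [-2.0, -2.0]

final_state = rnn([0.5, -0.2, 0.1], w_x=1.0, w_h=0.5, b=0.0)
```

The convolution reuses the same small kernel at every position, while the recurrent update reuses the same weights at every time step.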

Challenges and Resources

- Training a deep neural network effectively requires a substantial amount of data and computational resources.

- Cloud computing and specialized hardware (like GPUs) can help ease this process.

Best Practices

Below is a set of best practices for training and deploying deep learning models, covering data preprocessing, hyperparameter tuning, transfer learning, and model deployment strategies.

- Data Preprocessing

- Hyperparameter Tuning

- Transfer Learning

- Model Deployment
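As one example of data preprocessing, input features are often standardized to zero mean and unit variance before training. The sketch below shows this for a single feature column in plain Python. The helper name `standardize` is an assumption for this example, and libraries such as scikit-learn provide equivalent utilities.

```python
import math


def standardize(column):
    """Rescale a feature column to zero mean and unit (population) standard deviation."""
    mean = sum(column) / len(column)
    variance = sum((v - mean) ** 2 for v in column) / len(column)
    std = math.sqrt(variance) or 1.0  # a constant column would otherwise divide by zero
    return [(v - mean) / std for v in column]


scaled = standardize([2.0, 4.0, 6.0])
print(scaled)
```

The mean and standard deviation should be computed on the training data only and then reused for validation and test data, so that no information leaks from the held-out sets.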

Summary

In summary, deep learning empowers machines to learn complex concepts by building them from simpler ones. It’s a powerful tool with applications across various domains!

The deep learning cheat sheet is a comprehensive yet concise resource that aims to demystify the complexities of deep learning. By providing a centralized reference for essential concepts, architectures, techniques, and best practices, this cheat sheet empowers practitioners to navigate the ever-evolving landscape of deep learning with confidence and efficiency.