Calculus is the mathematical study of continuous change and algebra is the study of generalizations of arithmetic operations.


Introduction

Algebra is one of the oldest branches of mathematics. It helps us represent relationships and solve equations in fields such as engineering, physics, and computer science. It deals with:

- Equations: Forming and manipulating mathematical expressions using variables, constants, and operators.

- Unknown quantities: Solving for the values of variables that make an equation true.

- Basic properties of numbers: Commutative and associative laws, identities, inverses.
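As a small illustration of the three points above, the sketch below solves a linear equation of the form a·x + b = c for the unknown x and spot-checks a few number properties. The helper name `solve_linear` and the sample values are assumptions made for this example only.

```python
from fractions import Fraction

def solve_linear(a, b, c):
    """Solve a*x + b = c for x (hypothetical helper; requires a != 0)."""
    if a == 0:
        raise ValueError("a must be nonzero for a unique solution")
    # Undo the addition (subtract b), then undo the multiplication (divide by a).
    return Fraction(c - b, a)

x = solve_linear(2, 3, 11)   # the equation 2x + 3 = 11
print(x)                     # 4

# Spot checks of basic number properties on sample values.
p, q, r = 5, 7, 9
assert p + q == q + p                  # commutative law of addition
assert (p * q) * r == p * (q * r)      # associative law of multiplication
assert p + 0 == p and p * 1 == p       # additive and multiplicative identities
assert p + (-p) == 0                   # additive inverse
```

`Fraction` is used so that solutions such as 1/3 stay exact instead of being rounded to a float.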

Calculus focuses on rates of change and continuous variation. It helps analyze motion, growth, and complex systems. Differential calculus deals with derivatives, while integral calculus deals with integrals. Key concepts in calculus include:

- Derivatives: Instantaneous rates of change (slope of a curve).

- Integration: Finding areas under curves.

- Limits: Evaluating behavior as variables approach specific values.
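The three concepts above can be approximated numerically. The following is a minimal sketch, not a production-grade method: it estimates a derivative with a central difference, an area with the trapezoidal rule, and watches sin(x)/x as x approaches 0. The function names, step size, and test function are illustrative assumptions.

```python
import math

def derivative(f, x, h=1e-5):
    """Central-difference estimate of the slope of f at x."""
    return (f(x + h) - f(x - h)) / (2 * h)

def integral(f, a, b, n=10_000):
    """Trapezoidal-rule estimate of the area under f between a and b."""
    width = (b - a) / n
    total = 0.5 * (f(a) + f(b))
    for i in range(1, n):
        total += f(a + i * width)
    return total * width

def square(x):
    return x * x

slope = derivative(square, 3.0)      # exact value is 2 * 3 = 6
area = integral(square, 0.0, 3.0)    # exact value is 3**3 / 3 = 9
print(slope, area)

# Limit: sin(x) / x approaches 1 as x approaches 0 (it is undefined at x = 0 itself).
for x in (0.1, 0.01, 0.001):
    print(x, math.sin(x) / x)
```

Both estimates are approximations; shrinking `h` or raising `n` trades floating-point rounding error against truncation error.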

How Machine Learning Uses Algebra & Calculus

Let’s explore the mathematical foundations of machine learning, including algebra and calculus:

- Linear Algebra:

  - Linear algebra is fundamental in machine learning. It deals with vectors, matrices, and linear equations.

  - Key concepts:

    - Vectors: Represent quantities with both magnitude and direction.

    - Matrices: Organize data and transformations.

    - Matrix operations: Addition, multiplication, inversion, and transposition.

    - Eigenvalues and eigenvectors: Used in dimensionality reduction techniques.

  - Linear algebra is crucial for understanding neural networks, optimization, and data transformations.

- Calculus:

  - Calculus plays a vital role in machine learning algorithms. Here’s why:

    - Gradient descent: The gradient of the objective function points in the direction of steepest ascent, so stepping the model parameters in the opposite direction reduces the objective.

    - Backpropagation: In neural networks, derivatives computed with the chain rule guide weight updates during training.

    - Rate of change: Derivatives measure how an objective function responds to small changes in its inputs, which is what makes minimizing complex objective functions possible.

    - Partial derivatives: Measure the rate of change with respect to one parameter while the others are held fixed; used in optimization algorithms.

  - Understanding derivatives, integrals, and rates of change is essential for mastering machine learning.

- Statistics:

  - While not directly algebra or calculus, statistics is another critical component.

  - Concepts include:

    - Mean, standard deviation, and variance.

    - Hypothesis testing, confidence intervals, and correlation.

    - Estimation methods (maximum likelihood, method of moments).

  - Statistics guides model evaluation, feature selection, and understanding uncertainty.
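Several of the statistical quantities listed above can be computed with Python's standard `statistics` module. The sketch below uses made-up sample data (the `hours` and `scores` lists are assumptions for illustration) and writes the Pearson correlation out from its definition.

```python
import math
import statistics

# Hypothetical sample data, chosen only for illustration.
hours = [1.0, 2.0, 3.0, 4.0, 5.0]
scores = [52.0, 55.0, 61.0, 64.0, 68.0]

mean_score = statistics.mean(scores)       # arithmetic mean
var_score = statistics.variance(scores)    # sample variance (divides by n - 1)
std_score = statistics.stdev(scores)       # sample standard deviation

# Under a normal model, the maximum likelihood estimate of the variance
# divides by n rather than n - 1, which is the population variance formula.
mle_var = statistics.pvariance(scores)

def pearson(xs, ys):
    """Pearson correlation coefficient, written out from its definition."""
    mx, my = statistics.mean(xs), statistics.mean(ys)
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sx = math.sqrt(sum((x - mx) ** 2 for x in xs))
    sy = math.sqrt(sum((y - my) ** 2 for y in ys))
    return cov / (sx * sy)

r = pearson(hours, scores)
print(mean_score, var_score, std_score, mle_var, r)
```

The gap between `var_score` and `mle_var` shows that the two estimation conventions give different numbers on small samples.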

Remember, a solid grasp of these mathematical concepts empowers you to build and interpret machine learning models effectively!
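To make the gradient descent and partial derivative items above concrete, here is a minimal sketch that fits a line y = w·x + b by repeatedly stepping against the gradient of the mean squared error. The data, learning rate, step count, and function names are all assumptions chosen for illustration, not a recommended training setup.

```python
def mse_gradients(w, b, xs, ys):
    """Partial derivatives of the mean squared error with respect to w and b."""
    n = len(xs)
    dw = db = 0.0
    for x, y in zip(xs, ys):
        error = (w * x + b) - y
        dw += 2.0 * error * x / n   # contribution to d(MSE)/dw
        db += 2.0 * error / n       # contribution to d(MSE)/db
    return dw, db

def gradient_descent(xs, ys, learning_rate=0.05, steps=2000):
    """Start from w = b = 0 and step against the gradient a fixed number of times."""
    w, b = 0.0, 0.0
    for _ in range(steps):
        dw, db = mse_gradients(w, b, xs, ys)
        w -= learning_rate * dw
        b -= learning_rate * db
    return w, b

# Hypothetical data generated from the line y = 2x + 1.
xs = [0.0, 1.0, 2.0, 3.0, 4.0]
ys = [1.0, 3.0, 5.0, 7.0, 9.0]
w, b = gradient_descent(xs, ys)
print(round(w, 3), round(b, 3))
```

On this noise-free data the fitted values approach w ≈ 2 and b ≈ 1. A learning rate that is too large would make the updates diverge instead.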

Algebra-Calculus: Key Terms To Understand

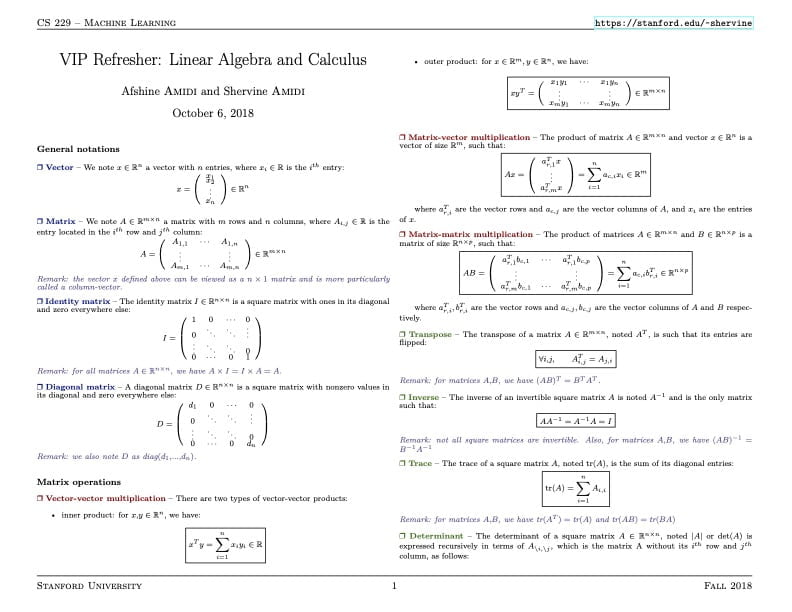

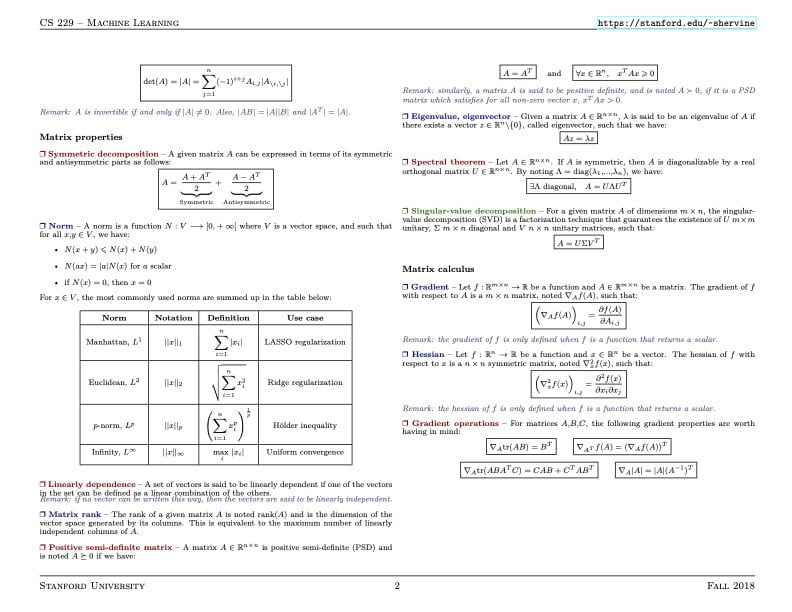

Vectors

A vector is a fundamental mathematical object used to represent quantities with both magnitude and direction. It can be thought of as an ordered list of numbers, arranged either as a row vector (horizontal) or as a column vector (vertical).
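A short sketch of these ideas, using plain Python lists as vectors: magnitude is the Euclidean length, and direction is the unit vector obtained by dividing by that length. The function names are illustrative assumptions.

```python
import math

def magnitude(v):
    """Euclidean length of a vector."""
    return math.sqrt(sum(component * component for component in v))

def unit_vector(v):
    """Direction of v: the same vector rescaled to length 1."""
    length = magnitude(v)
    if length == 0:
        raise ValueError("the zero vector has no direction")
    return [component / length for component in v]

def dot(u, v):
    """Dot product of two vectors of equal length."""
    return sum(a * b for a, b in zip(u, v))

row_vector = [3.0, 4.0]          # one row, two columns
column_vector = [[3.0], [4.0]]   # two rows, one column

print(magnitude(row_vector))     # 5.0
print(unit_vector(row_vector))   # [0.6, 0.8]
print(dot([1, 0], [0, 1]))       # 0, so these two vectors are perpendicular
```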

Matrices

A matrix is a two-dimensional array of numbers (or other elements) organized into rows and columns. It can represent various data structures, transformations, and systems of equations. Matrices are usually denoted by uppercase letters (e.g., A, B, C).

- Matrix Properties:

  - Dimension: The number of rows and columns in a matrix determines its dimension. For example, a 2×3 matrix has 2 rows and 3 columns.

  - Entries: The individual numbers within a matrix are called its entries or elements.

  - Equality: Two matrices are equal if they have the same dimensions and all their corresponding elements are equal.

  - Zero Matrix: A matrix whose elements are all equal to zero is called a zero matrix.

  - Square Matrix: A matrix with an equal number of rows and columns is called a square matrix (e.g., 3×3).

  - Identity Matrix: A square matrix with ones on the main diagonal and zeros elsewhere is called an identity matrix (usually denoted I).

- Operations:

  - Addition/Subtraction: Matrices of the same dimensions can be added or subtracted element-wise.

  - Scalar Multiplication: Multiply every element of a matrix by a scalar (a single number).

  - Matrix Multiplication: Each entry of the product is the dot product of a row of the first matrix with a column of the second, so the first matrix must have as many columns as the second has rows.

  - Transpose: Swap rows with columns (e.g., the transpose of matrix A is denoted A^T).

- Matrix Types: Some common matrix types are:

  - Identity (Unit) Matrix: A square matrix with ones on the main diagonal and zeros elsewhere.

  - Diagonal Matrix: A square matrix in which all non-diagonal elements are zero.

  - Singleton Matrix: A matrix having only one element.

  - Symmetric Matrix: A square matrix in which a_{ij} = a_{ji} for all i and j, that is, a matrix equal to its own transpose.
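The properties and operations listed above can be sketched with nested Python lists, one inner list per row. This is an illustrative implementation under that assumed representation; the function names are not taken from any library, and real projects would normally rely on a numerical library instead.

```python
def shape(m):
    """Return (rows, columns) of a matrix stored as a list of rows."""
    return len(m), len(m[0])

def add(a, b):
    if shape(a) != shape(b):
        raise ValueError("matrices must have the same dimensions")
    return [[x + y for x, y in zip(row_a, row_b)] for row_a, row_b in zip(a, b)]

def scale(k, m):
    return [[k * x for x in row] for row in m]

def transpose(m):
    return [list(column) for column in zip(*m)]

def matmul(a, b):
    if shape(a)[1] != shape(b)[0]:
        raise ValueError("columns of a must match rows of b")
    columns_of_b = transpose(b)
    # Each entry is the dot product of a row of a with a column of b.
    return [[sum(x * y for x, y in zip(row, col)) for col in columns_of_b]
            for row in a]

def identity(n):
    return [[1 if i == j else 0 for j in range(n)] for i in range(n)]

def is_symmetric(m):
    return m == transpose(m)

A = [[1, 2, 3],
     [4, 5, 6]]                         # a 2×3 matrix
print(shape(A))                         # (2, 3)
print(transpose(A))                     # [[1, 4], [2, 5], [3, 6]]
print(matmul(A, identity(3)) == A)      # True
print(is_symmetric(matmul(A, transpose(A))))   # True
```

Multiplying by the identity leaves `A` unchanged, and the product of a matrix with its own transpose is always symmetric, which the last two lines check on this example.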

Eigenvalues

- An eigenvalue of a square matrix A is a scalar (a single number) denoted by λ.

- It satisfies the equation: Av = λv, where v is a nonzero vector (the eigenvector) associated with that eigenvalue.

- Eigenvalues quantify how a matrix stretches or compresses space along specific directions.

- They are essential for understanding transformations, stability analysis, and system behavior.

Eigenvectors

- Eigenvectors represent the directions in which a matrix transformation only scales (stretches or shrinks) the vector without changing its direction.

- An eigenvector of matrix A is a nonzero vector v such that Av = λv for some scalar λ.

- For a real symmetric matrix, eigenvectors that belong to distinct eigenvalues are orthogonal (perpendicular) to each other.

- Eigenvectors are used in various fields, including physics, computer graphics, and data analysis.
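The defining relation Av = λv can be checked by hand for a small case. The sketch below handles only symmetric 2×2 matrices with a nonzero off-diagonal entry, using the closed-form roots of the characteristic equation. The function names and the sample matrix are assumptions for illustration; larger matrices need a numerical library.

```python
import math

def symmetric_2x2_eigen(a, b, d):
    """Eigenpairs of the symmetric matrix [[a, b], [b, d]], assuming b != 0."""
    if b == 0:
        raise ValueError("this sketch assumes a nonzero off-diagonal entry")
    center = (a + d) / 2
    radius = math.sqrt(((a - d) / 2) ** 2 + b * b)
    pairs = []
    for eigenvalue in (center + radius, center - radius):
        # (b, eigenvalue - a) satisfies the first row of (A - eigenvalue*I)v = 0.
        pairs.append((eigenvalue, [b, eigenvalue - a]))
    return pairs

def apply(matrix, v):
    """Matrix-vector product."""
    return [sum(m * x for m, x in zip(row, v)) for row in matrix]

A = [[2.0, 1.0],
     [1.0, 2.0]]
pairs = symmetric_2x2_eigen(2.0, 1.0, 2.0)
for eigenvalue, v in pairs:
    # A applied to v should equal v scaled by the eigenvalue.
    print(eigenvalue, v, apply(A, v))

(_, v1), (_, v2) = pairs
print(sum(x * y for x, y in zip(v1, v2)))   # dot product of the two eigenvectors
```

For this matrix the eigenvalues are 3 and 1, with eigenvectors (1, 1) and (1, -1). Their dot product is 0, which matches the orthogonality property of symmetric matrices.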

Algebra-Calculus Cheat Sheet

Summary

In summary, algebra manipulates equations and unknown quantities, while calculus studies rates of change and instantaneous behavior in functions. Both play crucial roles in mathematics and applied sciences!

Learn more about related topics

- Data Science Cheat Sheets

- Probability and Statistics

- Deep Learning Cheat Sheets

- Calculus by Wikipedia

- Algebra by Wikipedia